All These Worlds Are Yours

Transcript of my keynote talk for Mind The Product, 19 Nov 2020. Rerecorded HD video above (37 minutes).

1 · utopian visions

If you’ll indulge me, I’d like to begin with some shameless nostalgia. Let’s step back a decade, to the heyday of techno-utopia.

Back in 2010, the mobile revolution had reached full pace. The whole world, and all its information, were just a tap away. Social media meant we could connect with people in ways we’d never dreamt of: whoever you were, you could find thousands of like-minded people across the globe, and they were all in your pocket every day. People flocked to Twitter, reconnected with old friends on Facebook. Analysts and tech prophets of the age wrote breathless thinkpieces about our decentralised, networked age, and promised that connected tech would transform the power dynamics of modern life; that obsolete hierarchies of society were being eroded as we spoke, and would collapse around us very soon.

And sure enough, the statues really did start to topple. The Arab Spring was hailed, at least in the West, as a triumph of the connected age: not only did smartphones help protesters to share information and mobilise quickly, but this happened in – let’s be honest – places the West has never considered technically advanced: Egypt, Tunisia, Libya, the Middle East… What a victory for progress, we thought. What a brave affirmation of liberal democracy, enabled and amplified by technology!

Still, there were a few dissenting voices, or at least a few that were heard. Evgeny Morozov criticised the tech industry’s solutionism – its habit of seeing tech as the answer to any possible problem. Nicholas Carr asked whether technology was affecting how we thought and remembered. And growing numbers of users – mostly women or members of underrepresented minorities – complained they didn’t feel safe on these services we were otherwise so in love with.

But the industry wasn’t ready to listen. We were too enamoured by our successes and our maturing practices. Every recruiter asked candidates whether they wanted to change the world; every pitch deck talked about ‘democratising’ the technology of their choice.

We knew full well technology can have deep social impacts. We just assumed those impacts would always be positive.

Today, things look very different. The pace of innovation continues, but the utopian narrative is gone. You could argue the press has played a role in that; perhaps they saw an opportunity to land a few jabs at an industry that’s eroded a lot of their power. But mostly the tech industry only has itself to blame. We’ve shown ourselves to be largely undeserving of the power we hold and the trust we demand.

Over the last few years, we’ve served up repeated ethical missteps. Microsoft’s racist chatbot. Amazon’s surveillance doorbells that pipe data to local police forces. The semi-autonomous Uber that killed a pedestrian after classifying her as a bicycle. Twitter’s repeated failings on abuse that allowed the flourishing of Gamergate, a harassment campaign that wrote the playbook for the emerging alt-right. YouTube’s blind pursuit of engagement metrics that led millions down a rabbit hole of radicalisation. Facebook’s emotional contagion study, in which they manipulated the emotional state of 689,000 users without consent.

The promises of decentralisation never came true either. The independent web is dead in the water: instead, a few major players have soaked up all the power, thanks to the dynamics of Metcalfe’s law and continued under-regulation. The first four US public companies ever to reach a $1 trillion valuation were, in order, Apple, Amazon, Microsoft, and Alphabet. Facebook’s getting close. Today, 8 of the 10 largest companies in the world by market cap are tech firms. In 2010, just 2 were.

It seems we’ve drifted some way from the promised course. Our technologies have had severe downsides as well as benefits; the decentralised dreams we were sold have somehow given way to centralised authority and power.

2 · slumping reputation

For some time, though, it wasn’t actually clear whether the public cared. Perhaps they were happy dealing with a smaller number of players; perhaps they viewed these ethical scandals as irrelevant compared to the power and convenience they got from their devices?

Well, it’s no longer unclear.

Here’s some data from Pew Research on public attitudes toward the tech sector. It’s worth noting just how high the bar has been historically. The public has had very positive views of the industry for many years: there’s been a sort of halo effect surrounding the tech sector. But in the last few years there’s been a strong shift, a growing feeling that things are sliding downhill.

An interesting feature of this shift is that we see this sentiment across both political parties. We’ve heard a lot recently, particularly in the US, about how social media is allegedly biased against conservative viewpoints, or how YouTube’s algorithms are sympathetic to the far-right. But it seems hostility to the industry is now coming from both sides: perhaps this isn’t the partisan issue we’re told it is.

A common theme behind this trend is that people are mostly concerned about technology’s effects on the fabric of society, rather than on individuals.

This study from doteveryone captures the trend: the British public, in this case, feel the internet has been a good thing for them as individuals, but say the picture’s murkier when they’re asked about the impacts on collective wellbeing.

At the heart of this eroding confidence is an alarming lack of trust. In the same study, doteveryone found just 19% of the British public think tech companies design with their best interests in mind. Nineteen percent!

Frankly, this is an appalling finding, and one that should humble us all. But it also suggests a profound dissonance in how the public approaches technology. People are still buying technology, after all: the tech sector is doing well despite the Covid crash, and stocks are up significantly. The public clearly still finds technology useful and beneficial, but the data suggests people also feel disempowered, resigned to being exploited by their devices. It’s as if the general public loves technology despite our best efforts.

We’ve all witnessed this through the anecdotal distrust we see all around us. We all have a friend who’s convinced that Facebook is listening through their phone, that apps are tracking their every move. We all see this learned helplessness around us: there’s nothing I can do about it, so why fight it?

The other way this bubbles to the surface is through the dystopian media that’s sprung up around the topic. In particular, I’d point to two Netflix productions. Black Mirror is one, of course. It’s captured the public imagination through an almost universally grim depiction of technologised futures: as a collective work of dystopian design fiction it does its job admirably.

And then there’s The Social Dilemma, the recent documentary featuring Tristan Harris and other contrite Silicon Valley techies. It’s fair to say it’s not been well received in the tech ethics community: to be candid, the film’s guilty of the same manipulative hubris it accuses the industry of. But the fact a documentary like this got made – and has been quite successful – suggests the public’s starting to see technology as a threat, not just a saviour.

3 · dark futures

The risk is, of course, that things get even worse. The decade ahead of us could well unleash deeper technological dangers. Certainly we’ll see disinformation and conspiracy playing a deeper role in social media, particularly with the advent of synthetic media – deepfake computer-generated audio and video, in other words – that blur the line even more between what seems real and what is real.

Right now we think of facial recognition mostly as a tool for personal identification – unlocking our phones, focusing cameras, tagging friends on nights out. But facial recognition is already wriggling beyond this personal locus of control. It’s going to colonise whole cities, and in turn pose quite serious threats to human rights.

Once you can identify people at distance and without consent, you can also map out their friendships, and assemble a ‘hypermap’ of not just their present movements, but also their past actions, from video footage recorded maybe years ago. It’s a short enough step from there to an automated law enforcement dragnet: a list of anyone who fits a certain description in a certain time and place, issued to anyone with a badge and an algorithm.

There are already movements underway to ban police and governments from using facial recognition on the general public: these might be successful in some cities or countries, but that battle will have to be won over and over again with each new terrorist attack. And authoritarian regimes won’t show the same sort of restraint that liberal states might.

As more companies and states put faith in artificial intelligence, we’ll also see more algorithmic decisions. Although as a community we’re starting to wise up to the dangers of algorithmic bias, it’s still likely that the people commissioning these systems will see them as objective, neutral, infallible tellers of truth. Even if that were true – which, of course, it’s not – citizens will rightly start to demand that these systems should explain the decisions they take. That’s tough luck for anyone relying on a deep learning system, which is computationally and mathematically opaque thanks to its design. I’m not sure we’ll be happy to sacrifice that power to satisfy what might seem like a pedantic request. But I’d argue perhaps we should: surely an important right within a democracy is to know why decisions are taken about you?

My biggest worry is when algorithmic decisions creep into military scenarios. Autonomous weapons systems are enormously appealing in theory: untiring, replaceable, scalable at low marginal cost. They could also cause carnage in ways obvious to anyone who’s watched a science fiction film since, what… 1968? There are some attempts to ban autonomous weapons too; the countries dragging their heels are pretty much the countries you’d expect.

2020 was, at last, the year the 21st century lived up to its threats. It was the first year that didn’t feel like an afterbirth of the 19-somethings; a year in which historic fires burned, racial tensions ignited, and an all-too-predictable and -predicted pandemic exposed just how ready some governments are to sacrifice their citizens for the good of the markets.

The coming decade might be worse still. I appreciate we’re all feeling temporarily buoyed by welcome uplifts at the tail-end of a dreadful year – vaccines around the corner, a disastrous head of state facing imminent removal – but the fundamental rot hasn’t been addressed. Deep inequality is still with us; automation still threatens to uproot our economies and livelihoods; vast climate disruption is now guaranteed: the only unknown is how severe that disruption will be.

But let’s take a breather: I don’t want to collapse into dismay. Let’s come back to the issues we control: the fate of the technology industry. How did we get here? What went wrong?

4 · responsibility as blame

I’ve been in the field of ethical and responsible technology for maybe four or five years now, after fifteen as a working designer in Silicon Valley and UK tech firms. If I may, I’d like to share one of the patterns I’m most confident about, having seen it repeatedly during that time.

Product managers are the primary cause of ethical harm in the tech industry.

It’s a blunt claim, and perhaps not a popular claim to make at a huge product conference. I feel I need to offer some caveats, or partial excuses. Maybe that old classic – some of my best friends are product managers. I’m even married to one. But there’s a more important point: this pattern is not intentional.

I’d be lying if I said I’ve never met a PM who relishes acting unethically: sadly, I have met one or two. But the vast majority of PMs – the ones who don’t demonstrate borderline sociopathy – mean well, and want to do well. Many of them have been reliable, valuable partners of mine in flourishing teams. Many of them care deeply about ethics and responsibility and want to take these issues seriously.

But I still see teams taking irresponsible decisions with damaging consequences. I think it happens because these consequences are unfortunate by-products of the things the product community values, the way PMs take decisions, and the skewed loyalties I think this field has adopted.

5 · empirical ideologies

Let’s talk first about Lean. I remember shortly after Eric Ries’s book, The Lean Startup, came out: every company I spoke with thought they were unique in adopting Lean methods. Now everyone does. Lean isn’t just a set of methods any more: it’s become an ideology. And the problem with ideologies is they’re pretty hard to shift.

One of the central precepts behind Lean Startup is that we now live in a state of such constant flux and extreme uncertainty that prediction is an unreliable guide.

‘As the world becomes more uncertain, it gets harder and harder to predict the future. The old management methods are not up to the task.’ —Eric Ries.

Instead, we should put our faith in empirical methods: create a hypothesis, then build, measure, learn, build, measure, learn. It’s all about validating your assumptions through a tight, accelerated feedback loop.

Makes sense. As a way to reduce internal waste and to stagger your way to product-market fit, I can see the appeal. But I also think there’s a major flaw in this way of thinking: it leaves no space, no opportunity to anticipate the damage our decisions might cause. It abandons the idea of considering the potential unintended consequences of our work and mitigating any harms.

It brings to mind that phrase that’s come to haunt our industry: move fast and break things. Breaking things is fine if you’re only breaking a filter UI. It’s not fine if you’re breaking relationships, communities, democracy. These pieces do not fit back together again in the point release. This idea of validated learning and incremental shipping has caused teams to casually pump ethical harm in the world, to only care about wider social impacts when they have a post-launch effect on metrics.

6 · overquantification

Believers in Lean ideologies also tend to have a strong bias toward metrics, and the belief that the only things that matter are things that can be measured. Numbers are valuable advisers but tyrannical masters. This leads to a common PM illness of overquantification, a disease that’s particularly infectious in data-driven companies.

Overquantification is a narrow, blinkered view of the world, and again one that makes ethical mistakes more likely. Ethical impacts are hard to measure: they’re all about very human and social qualities like fairness, justice, or happiness. These things don’t yield easily to numerical analysis. That means they tend to fall outside the interests of overquantified, data-driven companies.

I think a lot of people think Lean offers us a robust scientific method for finding product-market fit. I’d say it’s more a pseudoscience, but ok. It makes sense, then, that this idea of validated learning lends itself very well to experimentation: in fact, Lean Startup says that all products are themselves experiments. So a lot of Lean adherents rely heavily on A/B and multivariate testing as a way to tighten that feedback loop.

Product experiments can be a useful input to the design process; they can help you learn more about which approaches are successful, they can help you optimise conversion rates, and all that. But I’ve also witnessed companies where the framing of experimentation shifts. Instead of A/B and multivariate tests providing data points for validated learning, they start to become tools of behaviour manipulation. Without realising it, teams start talking about users as experimental subjects; and experiments become about finding the best way to nudge or cajole users to behave in ways that make more money.

If you’re in this state – when you start thinking of users as masses, as aggregate red lines creeping up Tableau dashboards – you’re already beyond the ethical line. When you start thinking of people as not ends in their own right, but as means for you to achieve your own goals, you’re already in the jaws of unethical practice.

7 · business drift

I think we can trace the root of this tendency to a shift in the priorities of Product teams.

I’m sure most of us are familiar with this Venn diagram: I knew of it long ago; it was only very recently I found out it was created by Martin Eriksson himself.

This diagram shows Product managers sitting at the intersection of UX, tech, and business. I like it as a framing. It’s a hell of a lot better, for example, than that arrogant trope about PMs being the CEO of a product. What I’ve observed, though, is this isn’t really what happens. Or perhaps it once used to, but there’s been a drift.

Far too many product managers have drifted into the lower circle. They’ve become metrics-chasers, business-optimisers, wringing every last drop of value out of customers and losing sight of this more balanced worldview.

I can understand this drift. Being on the business’s side is a comfortable place to be. You’ll always feel you have air cover, always feel supported by the higher-ups, always feel safe in your role. And you’ll also be biasing your team to cut ethical corners to hit their OKRs.

8 · user-centricity causes externalities

One more root cause to mention, and this one isn’t just about product managers, but designers too. To realise lasting business value, we’re taught we have to focus with laser precision on the needs of users.

This, too, has to change. We’re starting to learn, later than we should have, that user-centred design doesn’t really work in the twenty-first century. Or at least, it has significant blindspots.

The problem with focusing on users is our work doesn’t just affect users. The biggest advantage digital businesses have is scale: they can grow to serve huge numbers of customers at very low marginal cost. It’s not that much more expensive to run a search engine with 1 billion users than 1 million users. Create a social platform that catches fire and you might find yourself with 100 million users in a matter of a few months.

We’re now talking about global-scale, human-scale impact. Technologies of this scale don’t just affect users; they also affect non-users, groups, and communities. If you live next door to an Airbnb, your life changes, likely for the worse. Your new neighbours won’t care so much about the wellbeing of your community; they’ll be more likely to spend their money in the tourist traps than in the local small businesses; and, of course, their presence pushes up rents throughout the neighbourhood.

From a UX and product point of view, Airbnb is a fantastic service. It’s a classic two-sided platform that connects user groups for mutual gain. But all the costs, the harms, the externalities fall on people who haven’t used Airbnb at all: neighbours, local businesses, taxpayers… User-centricity has failed these people. Product-market fit has caused them harm.

Large-scale technologies don’t just affect groups of people. They also affect social goods: in other words, concepts we think are valuable in society. There’s been a lot of talk about how Facebook, for example, has torn the fabric of democracy. Some sociologists and psychologists say Instagram filters could damage young people’s self-image. These values simply aren’t accounted for in user-centred thinking: we see them as abstract concepts, unquantifiable, out of scope for tangible product work.

And then there’s non-human life, which our current economic models see just as a resource awaiting exploitation, as latent value ready for harvest. Humans have routinely exploited animals in the name of progress. Think of poor Laika, sent to die in orbit, or the stray animals Thomas Edison electrocuted to discredit his rivals’ alternating current.

Alongside this, there’s the very health of the planet. The news on climate crisis is so terrifyingly bad, so abject, that it’s immoral to continue to build businesses and to design services that overlook the importance of our shared commons. Climate is the moral issue of our century: there’s no such thing as minimum viable icecaps.

So even if product managers position themselves at the heart of UX, tech, and business, they may well be missing their moral duties to this broader set of stakeholders, to non-users, to groups and communities, to social structures, nonhuman life, and our planet itself.

9 · it doesn’t have to be this way

I’ve been talking about some dark futures for technology and for our world. The good news is that it doesn’t have to be this way. One of the things any good futurist will tell you is that the future is plural. It’s not a single road ahead: it’s a network of potential paths. Some are paved, some muddy; some are steep, some are downhill. But we get to choose which route we take.

Sometimes I’m sharply critical of our industry, but please don’t misunderstand me. I truly believe technology can improve our world, can improve the lot of our species. Technology can help bring about better worlds to come. If I didn’t believe that, I wouldn’t still be doing this work.

What it will take, however, is for us to reevaluate our impacts along new axes: to actively seek out a more ethical, more responsible course.

In retrospect, we’ll look back on 2020 as a pivotal year. I’m sceptical of some of the grand narratives people offer about the post-Covid world, but I do think it’s true that the deck of possible futures has been thoroughly shuffled this year. We now have the opportunity to choose new directions. We might not get a reset of this magnitude again.

And I want you – the product community – to lead this charge. I expect it’s hard to appreciate this from the inside, but you all hold an immense amount of power within technology companies, and within the world. You are the professionals whose decisions will shape our companies and products; your decisions will change how billions of future users interact with technologies and, by extension, with each other. I want you to exercise that power with thought and compassion.

If this is going to happen, you need to rethink what you value; what drives your processes and decisions. That’s, by necessity, a long journey. It’ll involve lots of learning, plenty of failure. For the rest of my time here, I’d like to suggest some first steps.

Here’s my fairly crude attempt to illustrate a responsible innovation process; let’s step through what it might mean for you.

10 · carving out space for ethical discussion

Perhaps most importantly, teams need to make space in their processes for ethical deliberations, to examine potential negative impacts, and look for ways to fix them before they happen. It doesn’t really matter when this takes place. You could adapt an existing ritual: a design critique, a sprint demo, a retro, a project pitch. Or maybe you ring-fence time some other way. But you must try.

When you do this, be prepared: someone will tell you it’s a waste of time. People use technologies in unpredictable ways, they’ll say: we can’t possibly foresee all of those. They might even quote the ‘law of unintended consequences’ at you, which says pretty much the same thing: you’ll always miss something.

Don’t listen. It is true that you’ll never foresee all the consequences of your decisions. But anticipating and mitigating even a few of those impacts is far better than doing nothing. And moral imagination, as we’d call it, isn’t a gift that only a few lucky souls have: it’s more like a muscle. It gets stronger with exercise. Maybe you only anticipate 30% of the potential harms this time around. Next time it might be 40%. 50%.

The easiest way to get started on this is a simple risk-mapping exercise: a set of prompt categories and questions you can run through to identify potential trouble spots. I recommend using the Ethical Explorer toolkit as a starting point; it’s been made by Omidyar Network for exactly this use case. It’s free and doesn’t need any special training to use.

11 · getting out of the building

Eventually you’ll realise this isn’t enough, of course. Trying to conjure up potential unintended impacts from inside a meeting room or a Zoom call is worth doing, but is always going to be limited. Soon enough you’ll want to get out of the building.

You know this idea from customer development, of course. You know that talking with real people opens your eyes to new perspectives like nothing else can – that it helps you move from empathy to insight. So talk to your researchers about ways to better understand ethical impacts. I promise they’ll be delighted to help you: anything that’s not another usability test will make their eyes light up.

But remember here, it’s not just about individual users: it’s worth broadening your research to hear from other stakeholders. So look for ways to give voice to members of communities and groups, particularly if they’re usually underheard in this space. Reach out to activists and advocates. Listen to their experiences, understand their fears; work with them to prototype new approaches that reduce the risks they foresee.

12 · learning about ethics

Spotting potential harms in advance is a great start, but you then need to assess and evaluate them. Which are the problems that really matter? What do we consider in scope, and what’s outside our control? How do we weigh up competing benefits and harms?

There’s plenty of existing work to help us answer these questions. Plenty of people in the tech industry share an infuriating habit: they believe they’re the first brave explorers on any new shore. But this isn’t a topic to invent from scratch. There’s so much we can, and should, learn from the people already in this space.

When I moved into the field of ethical tech, around five years ago, I was stunned by the depth and quality of work that was already going on behind the industry’s back. Ethics isn’t about dusty tomes and dead Greeks! It’s a vital, living topic, full of artists, writers, philosophers, and critics, all exploring the most important questions facing us today: How should we live? What is the right way to act?

There’s a lot happening in this movement, and a lot to learn. Some people think ethics is fuzzy, subjective, difficult. If you take the time to deepen your knowledge, you’ll realise this isn’t really true. There’s strong groundwork that’s already been laid: there are robust ways to work through ethical problems. You can use these to take defensible decisions that make your team proud.

13 · committing to action

You then have to commit to action. There’s a sadly common trend these days of ethics-washing – companies going through performative public steps to present a responsible image but unwilling to make the changes that really matter. An ethical company has to be willing to take decisions in new ways, to act according to different priorities, if it’s going to live up to its promises.

Maybe it’s something simple, like committing as a team to never ship another dark pattern. Maybe it’s ensuring your MVP includes basic user safety features, so you reduce the risk that vulnerable people are harassed or attacked.

I particularly want to mention product experimentation here. It astounds me how ethically lax our industry is when it comes to experimentation. Experimentation and A/B testing is participant research! In my view, this means you have a moral obligation to get informed consent for it. So tell customers that A/B tests are going on. Let people find out which buckets they’re in and, crucially, offer them a chance to opt out. Be sure that you remove children from experimental buckets.
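If it helps to see how little machinery this needs, here’s a minimal sketch in Python. It’s an illustration under stated assumptions, not a definitive implementation: every name in it (`User`, `is_eligible`, `assign_bucket`, `describe_participation`), the age threshold of 18, and the choice to treat unknown ages as children are hypothetical, and your own legal and research teams should set the real rules.

```python
import hashlib
from dataclasses import dataclass
from typing import Optional

CONTROL = "control"  # the default experience, shown to anyone not in an experiment


@dataclass
class User:
    # Hypothetical user record, for illustration only.
    user_id: str
    age: Optional[int]        # None when age is unknown
    consented: bool = False   # has the user agreed to take part in experiments?
    opted_out: bool = False   # has the user asked to leave all experiments?


def is_eligible(user: User, adult_age: int = 18) -> bool:
    """Only consenting adults who have not opted out may be bucketed."""
    if user.age is None or user.age < adult_age:
        return False  # assumption: unknown age is treated as a child
    return user.consented and not user.opted_out


def assign_bucket(user: User, experiment: str, variants: list[str]) -> str:
    """Return a stable bucket for an eligible user, or CONTROL otherwise."""
    if not is_eligible(user):
        return CONTROL
    # Hashing experiment name + user id gives the same bucket on every visit.
    digest = hashlib.sha256(f"{experiment}:{user.user_id}".encode()).hexdigest()
    return variants[int(digest, 16) % len(variants)]


def describe_participation(user: User, experiments: dict[str, list[str]]) -> dict[str, str]:
    """What a 'which experiments am I in?' page could show this user."""
    return {name: assign_bucket(user, name, variants)
            for name, variants in experiments.items()}
```

The design choice worth noting is that eligibility is checked before any bucketing happens, so consent, opt-out, and the child exclusion can’t be skipped by an individual experiment. Because assignment is deterministic, the same function can power a settings page that tells people which buckets they’re in.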

14 · positive opportunities

But this step isn’t just about mitigating harms. One welcome trend I’ve seen is that the conversation about ethics is moving beyond risk. Companies are starting to realise that responsible practices are also a commercial advantage.

Jonathan Haidt, who’s a professor of business ethics at NYU, has found that companies with a positive ethical reputation can command higher prices, pay less for capital, and land better talent.

There’s plenty of evidence that customers want companies to show strong values. Salesforce Research found 86% of US consumers are more loyal to companies that demonstrate good ethics. 75% would consider not doing business with companies that don’t.

So this stage also means capitalising on opportunities you spot in your anticipation work. This means thinking differently about ethics.

I think too many people see ethics as a negative force: obstructive, abstract, something that can be a drag on innovation. I see it differently. Yes, ethical investment will help you avoid painful mistakes and dodge some risks. But ethics can be a seed of innovation, not just a constraint.

The analogy I keep returning to is a trellis. Your commitment to ethics builds a frame around which your products can grow. They’ll take on the shape of your values. Your thoughtfulness, your compassion, and your honesty will reveal themselves through the details of your products.

And that’s a powerful advantage. It’ll help you stand out in a wildly crowded marketplace. It can help you make better decisions, and build trust that keeps customers loyal for life.

As this way of thinking becomes more of a habit, you need to support its development. A one-off process isn’t much good to anyone: you need to build your team’s capacity, processes, and skills, so responsible innovation becomes an ethos, not just a checklist.

So you need to spend some time creating what I call ethical infrastructure. This might include publishing a set of responsible guidelines for your team or your company. Maybe it’s including ethical behaviours in career ladders. Perhaps in some cases you’ll want to create some ethical oversight – a committee, a team, or at least a documented process for working through tough ethical calls.

It’s easy to go too quickly with this stuff, and build infrastructure you don’t yet need. Keep it light. Match the infrastructure to your need, to your team’s maturity with these issues. If you keep your eyes open, it’ll soon become clear what support you need.

15 · close

These are still pretty early days for the responsible tech movement. We may be stumbling around in the gloom, but we’re starting to find our way around. Personally, I find it thrilling – and challenging – to be in this nascent space. Something exciting is building: maybe there’s hope for an era of responsible innovation to come.

Because we’re starting to realise we won’t survive the 21st century with the methods of the 20th. We’re beginning to understand how user-centricity has blinded us to our wider responsibilities; that the externalities of our work are actually liabilities. Businesses are learning they have to move past narrow profit-centred definitions of success, and actively embrace their broader social roles.

After all, we’re human beings, not just employees or directors. Our loyalties must be to the world, not just our OKRs.

Wherever it is we’re headed, we’ll need support, courage, and responsibility. My product friends, you have a power to influence our futures that very few other professions have. All these worlds are yours: you just have to choose which worlds you want.

Norman on ‘To Create a Better Society’

Don Norman’s latest piece ‘To Create a Better Society’ has me rather torn.

First, I worry about the view that designers should be the leaders on social problems. We can/should engage with these issues, but respectfully and collaboratively. We don’t need Republic-esque designer-philosopher-kings. Facilitation yes, domination no. I’m sure this is not Norman’s intent, but some of the language is IMO too strong.

Second, and more seriously, Norman has been hostile to people pushing some of these very ideas in recent years. They deserve credit for handling his attacks with dignity and (whether he appreciates it or not) ultimately shifting his perspectives.

That aside, a design luminary arguing that commercial priorities have pushed aside the topics of power, ethics, and ecology in design, and that design should become less Western-centric & colonial… well, we need more of that kind of talk.

Tips for remote workshops

Some lessons I learned from running five days of remote workshops.

Even more breaks than you think you need. Ten minutes every hour. Gaps between days, too. I couldn’t stomach the grisly prospect of Mon–Fri solid, so we ran Tue/Wed → Fri → Mon/Tue. Life-saving.

Use a proper microphone. My voice nearly gave out on day 1 because I hadn’t plugged in, and ended up resorting to my videocall voice. Wasn’t a problem once I dug the Røde out.

Use the clock for prompt time-keeping – ‘see you all at 11:05’ – and restart on the dot, so attendees know you mean it.

Be alert to, and accommodate, chat: my group fell into a great pattern of using it for non-interrupting questions.

Childlock everything in Miro. Lock and hide boards, pre-drag stickies. That thing’s only usable if you sharply reduce degrees of available freedom.

Set and enforce a ground-rule that any camera-visible cats must be introduced to the group. We stopped three times; one of the best decisions I made.

Anyway, it reminded me of Twitch streaming, except you can see the audience, and it’s less fun but far better paid. In all, I think our sessions went damn well. Would still far rather have done them in person, but we overcame some of my scepticism. A good group goes a long way.

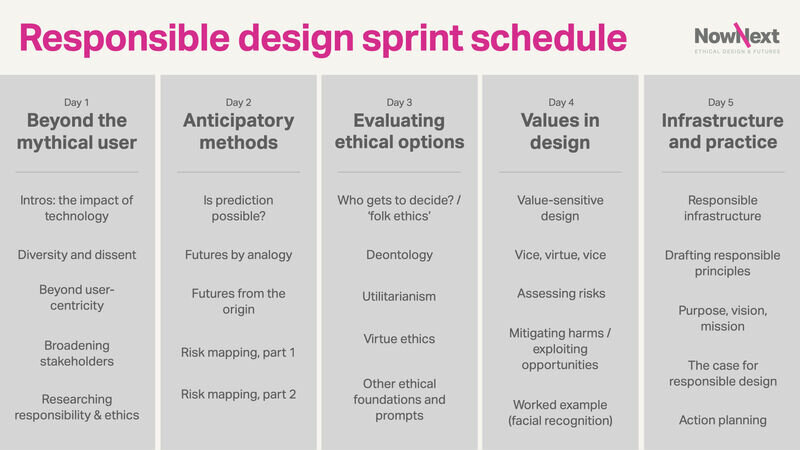

Responsible design sprint overview

Putting the finishing touches on a five-day responsible design training & co-creation sprint. Starts tomorrow. Here’s what we’ll be covering.

If this sounds like just the thing for your team too, let us know.

The limits of ethical prompts

Starting a big project next week, working as a designer-in-remote-residence, helping our client establish and steer a responsible design programme. Lots of planning and prep, including immersing myself in ethics toolkits, card decks, canvases etc to see what we could use.

Some of them are pretty decent, but I’ve found the significant majority are just lists of prompt questions, perhaps grouped into loose categories. Colourful, good InDesign work, but not much content beyond this.

Ethical prompt questions have their place: asking ourselves tricky questions is far better than not asking ourselves tricky questions. But we need to be sceptical of our ability to see our own blindspots. Answers to the question ‘Are we missing something important?’ will – you guessed it – often miss something important.

By all means use toolkits, ask ethical prompts. But be willing to engage with people outside the conference rooms too: people not like us; people more likely to experience the harms of technology. ‘We got ourselves into this mess, so it’s on us to get out of it’ is an understandable view, but can lead to yet more technocracy. Let’s bring the public into the conversation.

Book review: Building for Everyone

One of the most welcome trends in ethical tech is an overdue focus on a wider range of stakeholders and users: a shift from designing at or for diverse groups toward designing with them.

Building for Everyone, written by Google head of product inclusion Annie Jean-Baptiste, has arrived, then, at just the right time. The movement is rightly being led by people from historically underrepresented groups, and I’ve long had the book on pre-order, interested to read the all-too-rare perspectives of a Black woman in a position of tech leadership.

Jean-Baptiste’s convincing angle is that diversity and inclusion isn’t just a matter of HR policy or culture; it’s an ethos that should infuse how companies conceive, design, test, and deliver products. Make no mistake, this is a practitioner’s volume, with a focus on pragmatic change rather than, say, critical race theory. It’s an easy, even quick read.

It’s also a Googley read. While I have a soft spot for Google – or at least I’m easier on it than many of my peers – I find the company to have a curious default mindset: massive yet strongly provincial, the Silicon Valley equivalent of the famous View of the World from 9th Avenue New Yorker cover. True to form, Building for Everyone draws heavily on internal stories and evidence.

Google also has a reputation as an occasionally overzealous censor of employee output, and here the overseer’s red pen bleeds through the pages. The story told is one of triumph: an important grass-roots effort snowballed and successfully permeated a large company’s culture, and here’s how you can do it too. Jean-Baptiste deserves great credit for her role in this maturation and adoption, but the road has been rockier than Building for Everyone is allowed to admit. Entries such as ‘Damore, James’ or ‘gorillas’ – unedifying but critical challenges within Google’s inclusion journey – are conspicuously absent from the index.

This isn’t Jean-Baptiste’s fault, of course. An author who holds a prominent role in tech can offer valuable authority and compelling case studies. The trade-off is that PR and communications teams frequently sanitise the text to the verge of propaganda. Want the big-name cachet? Be prepared to sacrifice some authenticity. The book is therefore limited in what it can really say, and inclusion is positioned mostly as a modifier to existing practices: research becomes inclusive research, ideation becomes inclusive ideation, and so on.

This stance does offer some advantages. It scopes the book as an accessible guide to practical first steps, rather than a revolutionary manifesto. Building for Everyone seeks to urge and inspire, and does so. Jean-Baptiste skilfully argues for inclusion as both a moral and financial duty; only the most chauvinistic reader can remain in denial about how important and potentially profitable this work is.

Nevertheless, this is a book that puts all its chips on change from within. But is that ever sufficient? The downside to repurposing existing tech processes and ideologies is that in many cases those tech processes and ideologies are the problem: they are, after all, what’s led to exclusionary tech in the first place. At what point do we say the baby deserves to be ejected with the bathwater?

We need incrementalist, pragmatic books that win technologists’ hearts and minds, and establish inclusion as non-optional. If that’s the book you need right now, Building for Everyone will likely hit the spot. But we also need fiercer books that take the intellectual fight directly to an uncomfortable industry. Sasha Costanza-Chock’s Design Justice, next on my list, looks at first glance to fill that role, if not overflow it. More on that soon.

New article: ‘Weathering a privacy storm’

I’ve done a lot of privacy design work this year, much of it with my friends at Good Research. I worry many designers and PMs think privacy’s a solved problem: comply with regs, ask for user consent if in doubt, jam extra info in a privacy policy.

Nathan from Good Research and I analysed a recent lawsuit (LA v Weather Channel/IBM) and found privacy notices and OS consent prompts may not be the saviours you think they are. Not only that, but enforcement is now coming from a wider range of sources. Time to take things more seriously.

The Social Dilemma

I watched The Social Dilemma. At its worst moments it was infuriating: deeply technodeterministic, denying almost any prospect of human agency when it comes to technology – pretty much the identical accusation it makes of big tech.

As is common with C4HT’s work, it was either oblivious to or erased (which is worse?) the prior work of scholars, artists etc who warned about these issues long before contrite techies like the show’s stars – and I – came along.

As Paris Marx writes, it teetered on the brink of a bolder realisation, but was too awed by its initial premise to go there. And so it retreated into spurts of regulate-me-daddy centrist cop shit and unevidenced correlation/causation blurring.

But but but. I’m not the audience. I stress in my work that it can’t just be down to the tech industry to solve these problems. We have to engage the public, to explain to them what’s happening in their devices and technological systems. We have to seek their perspectives, request they put pressure on elected officials, and that they force companies to adopt more responsible approaches. If The Social Dilemma does that, perhaps that’s a small gain.

But, oof. If you’re in this field… pour a stiff drink before hitting Play.

A future owners test

A question we should ask more: ‘Is this technology safe in the hands of plausible future owners?’. Naturally, we assess ethical risk mostly in the present-day world, looking at today’s norms, laws, and policies. But things change.

Say you’re building a public-sector app or algorithm. The department you’re working for may have good protocols in place – regulations, perhaps, or internal processes – that give you confidence they’ll oversee this technology properly. But what about future governments? You’re hopefully building something that will stick around, and we live in unstable times. Would this system still be safe in the hands of a government pursuing different politics? A police state? An ethnonationalist government? An autocracy? Policies and laws can be overturned; you might be relying on protections a future authority could easily revoke.

Same goes for commercial work too. There’s a hostile takeover of your company, or maybe it fails and its digital assets are snapped up in a fire sale, and suddenly your system belongs to someone else. Would it still be safe in the hands of a defence contractor? A data broker? Palantir?

Or perhaps the nature of your company itself shifts, and an initially benign use case takes on different colour when the company moves into a new sector…

I’d like to see more of this thinking – maybe we could call it the future owners test – in contemporary responsible tech work. We mustn’t get so wrapped up in today that we overlook tomorrow.

Endnote: the word ‘plausible’ is, of course, doing a lot of work. The depth of this questioning should be proportionate to the risk; many eventualities can be safely ignored in many contexts. I’m not worried about, say, the far-right getting their hands on Candy Crush data, but I sure would be if they inherited a national carbon-surveillance programme.

Read a little, write a little

One emotion I didn’t expect among this year’s avalanche: I feel blunted. I can’t find the words to describe things I care about; the ground is moving faster than my feet.

I’m old enough to know this usually means I haven’t been reading enough. Makes sense, with so many stresses to juggle, so much intellectual comfort food at easy reach. I’ve tapped two new lines – ‘Read a little / Write a little’ – into my daily checklist, in an attempt to rehabituate myself and resharpen those edges. Which makes this day one of a new routine, I suppose.

Kelly Pendergrast’s Home Body is a curious essay, opening with womblike hauntings and cyborg urbanism, but settling on the habit infrastructure has of binding us all together, even when we feel most isolated. However, Pendergrast warns, we mustn’t fetishise the infrastructure itself at the cost of overlooking the social forces that shape and sustain it.

All the mapping and “making visible” in the world can’t right what’s wrong, and even the most good-faith attempts at rigorous transparency can’t avoid glossing over or eliding the horrors buried in global supply chains and local power structures.

It’s a weird, nuanced, challenging piece, and exactly the sort of thing I love Real Life Magazine for.

Future Ethics flash sale

I’m running a flash sale on Future Ethics. Digital bundle (Kindle, Apple Books, PDF) now $5; signed paperbacks £9 + postage (UK & Europe only). Reduced prices on the Bezos megastore too. Cheapest it’s ever been, but the sale will last until the end of next week only.

I’ve also made an overdue preview available: you can now download chapters 1, 2, and 3 as a free, unwatermarked PDF. Dip your toes in, the water’s lovely, etc.

🤯👉-> https://nownext.studio/future-ethics <-👈🤯

Responsible design: a process attempt

The most common question I get on responsible design: ‘How do I actually embed ethical considerations into our innovation process?’ (They don’t actually phrase it like that, but you know… trying to be concise.)

Although I don’t love cramming a multifaceted field like ethics into a linear diagram, it’s helpful to show a simple process map. So here’s my attempt.

IMO the first step is to spend time trying to anticipate potential moral consequences of your decisions. Ignore those who tell you this is impossible: most technology firms have no muscles for this because they’ve never seriously tried to do it.

What we’re trying to do here is stretch our moral imaginations. This can involve uncovering hidden stakeholders and externalities that might befall them – to do that, we almost always have to think beyond narrow user-centricity and embrace wider inclusion. Anticipating moral implications is also made easier when we involve techniques from the futures toolkit, e.g. horizon scanning, scenario development, or speculative design.

Then we need a way to evaluate these impacts. Complex systems create competing consequences: how do we decide whether a benefit outweighs a harm? Again, industry is inexperienced at this, so this usually devolves into an opinion-driven debate, won by the most senior voice.

But, of course, people have been doing the hard work for us these last couple of millennia. We can take advantage of the ethical theory they created to break past this flawed belief that ethics is subjective. We can use structured, robust methods to examine consequences and evaluate decisions rationally but compassionately, based on well-founded contemporary thinking, and hopefully fostering the wellbeing of all.

Having identified and evaluated potential consequences, we can now take action. If there’s potential harm, we can try to minimise it or design it out of the system before it happens.

But ethics isn’t just about stopping bad things happening: there’s a growing realisation that responsible design is also a seed of innovation, a competitive differentiator. So we use this evaluation to create new products and features with positive ethical impacts, too.

Underpinning all of this, we need ethical infrastructure. This is the lever most newcomers immediately reach for: a C-level appointment, a documented code of ethics, etc. And these things can have their place, for sure. But they’re there to support an ongoing process and culture of making good ethical decisions: they achieve virtually nothing on their own. So building this capacity is important, but is only effective if it’s deployed in parallel with proper decision-making processes like this.

After dread

A short talk / article written for the Global Foresight Summit 2020. Available below as embedded audio.

It took just a fortnight for ‘these uncertain times’ to become a cliché. Sharp discontinuities like this pandemic – let’s not call it a black swan, because it was both predictable and predicted – have a habit of injecting new terminology into public consciousness. This time around: social distancing, herd immunity, furloughed workers, and flattened curves. We shake our heads at the same press reports, archive those same panicked retailer emails, stare in disbelief at the same steepening graphs. It’s an unfortunate golden age for information design. If 2019 was the year of the algorithm, 2020 is surely the year of the logarithm.

But who’d have thought it? The shock of the new is that the new turned out to be… absence. With half the world in lockdown, there’s no movement, no commerce, no economy. Covid–19 has forced the shark of modern capitalism to stop swimming. And so the only persistent narrative of our lives collapses around us, and the void rushes in.

It’s in vacuums like this that fear begins to spread. With the peak still days away, we fear for our loved ones, our health workers, our jobs, and even our votes. As part of that much-discussed group, Team Underlying Conditions, I myself feel this fear acutely. And fear changes us. We start to see our fellow human as a potential enemy, a hostile vector, and this fear turns us against the world. It undermines neighbourhoods and communities. It sours us to humanity.

But, thinking about it, fear isn’t quite the right term. The mathematics of the situation are more about statistics than probabilities. It’s not fear: it’s something more like dread. What we feel isn’t just concern that bad things might happen: we know they’ll happen, that they’re happening right now. All we can do is hope the statistics end up being less awful than they might be. Covid–19 is a progressive illness: it’s the second week that kills you. Similarly, the stress of lockdown could easily cause a gradual worsening in our collective spirit. In our darkest times, it’s not just the virus we fear, but the future itself.

For many of us, particularly the sort of person who watches or gives talks about the future in their spare time, it’s a novel experience to live amid dread. Plenty of other folks, typically the ones society has trodden upon and discarded, would contend that dread is nothing new. But here the mathematics also dictate the eventual pattern, and reassure us this dread is only temporary. Exponentiality can’t last forever in a finite population. Soon, although nowhere near soon enough, we’ll reach a point of viral saturation, of immunity, or – if we’re lucky – develop a vaccine, at which point those terrible red lines will crawl back down those log plots. So we know this dread will recede in time, to be replaced by a complex mix of relief, guilt, and anger.

But there is another dread. Just like Covid–19, climate crisis is underpinned by scientific certainty. The punishment’s in the post; the only question is how bad things will get. And so the predominant emotion about climate is also not fear but dread.

Again, this is hardly news to a particular section of society. An entire generation is already grappling with climate dread, seeing the future not as a promise but as a threat. Their reward for their noble, sad defiance – school strikes and protests against the tide of certainty – is name-calling (‘snowflake’) and scorn from the generations that fiddled with their satnavs while the planet burned.

We have to be careful here not to overextend the comparisons between Covid–19 and climate crisis. It feels crass and insensitive, whataboutery at precisely the wrong time, and it would be a category error to claim that how we experience one will foretell how we experience the other. But it’s also a mistake to overlook that future horrors act as the backdrop to today’s dread. If we can learn anything about this crisis that alleviates the next, we must.

As the RSA’s Anthony Painter describes, this crisis gives us an opportunity to forge a bridge world that crosses some of the chasm between today’s rotten systems and the sustainable versions we’ll need to survive climate change. Similarly, Dan Hill points to weak signals, glimmers of more resilient futures amid the crisis.

Because as well as the very real horror, the mass slowdown caused by the virus is giving us a glimpse of something else, aspects of another green world just within reach.

And, of course, we have the memes. Dinosaurs return to Times Square; Nessie comes ashore at Inverness. Nature is healing, thanks to this overdue pause.

Where everyone seems to agree is that things can’t go back the way they were. As Fred Scharmen says, this isn’t the end of the world, but it’s certainly the end of a world. We live in an era of ‘in an era of’s: this crisis has struck at what we’re repeatedly told is a hugely vulnerable moment, when our trust in grand narratives, institutions, and expertise has collapsed. We’ve learned the economic recovery was built of balsa-thin gig-economy precarity, and authoritarians have exploited our democratic disquiet. So we conclude this dislocation has finally demolished the fragile walls of whatever came before; that our old presents and futures are now anachronisms.

‘Nothing could be worse than a return to normality. Historically, pandemics have forced humans to break with the past and imagine their world anew. This one is no different. It is a portal, a gateway between one world and the next.’ —Arundhati Roy

And so, in an era of narrative collapse, there’s a scramble for new narratives. Predictably, various interest groups are already jostling for position, trying to project their own blueprints onto the ruins. Far-right accelerationists see the virus as a perfect catalyst for collapse, intensified by prepper paranoia and racist conspiracy, and allowing a world of white supremacy to emerge from the rubble. Leftists, myself included, have found a new home for our common refrain: surely capitalism really is dying this time? Writing for Al-Jazeera, Paul Mason compares Covid–19 to the Black Death (a comparison not welcomed by all), and claims the panic measures governments and banks have resorted to are one-way valves; in other words, that a new economic world is already upon us. Economic pipe dreams have become immediate, urgent reality. With interest rates having never recovered from 07/08, the only lever left to pull was the one marked ‘cash’, in the form of massive quantitative easing and the closest thing to Universal Basic Income conservative governments could conceivably offer. Along the way, claims Mason, giants across numerous sectors will have to be nationalised – airlines, retailers, railways, insurers – all humiliating concessions for die-hard believers in the power of the free market.

It’s clear the role of the nation state is being transformed, although we must remember that in some countries – the United States, India, and Brazil, say – it’s only regional government that is holding society together in the face of federal denial and incompetence. And it’s likely the Overton window has already lurched left, as voters realise capitalism is now so fragile it has to be bailed out by socialism every decade or two. But I’m not convinced by the urge to stamp set-piece futures onto the blank canvas. Nor is Richard Sandford.

Are these futures right for the post-pandemic world? They were made before the current situation, after all, using the ideas and categories and levers that were in place before the virus spread. I think it’s worth asking whether the building-blocks of these ready-to-hand futures are going to be unchanged. […] There are some big changes underway that might mean we don’t value futures the way we do now, or at least that we might value different futures.

An even larger concern is how eagerly we believe the status quo will quit without a fight. Remember, just five years passed between the starry-eyed postcapitalism of Occupy and the jolts of Brexit and Trump. Old habits have a nasty tendency to return to claim their ancient territories; the industrial growth era may be in the ICU right now, but it’s desperate to make a full recovery, and has all the resources it needs to do so.

In times of dramatic flux, it’s hard to remember the old reality, but there’s a corollary: on the other side, it’s likely we’ll try hard to forget the crisis times. The world may well find itself so pleased to have survived through the present dread that it wilfully ignores the larger dread to come. After all, the politicians have promised us a V-shaped recession, suggesting a painless rebound to the way things were. It’s easy to imagine a developed world joyfully lapsing into comforting consumption, rebooking those cancelled vacations and finally upgrading the SUV as a reward for months of enforced temperance. To paraphrase Herbert Marcuse, whatever you throw at capitalism, it will absorb and sell back to you.

This pandemic has reshuffled the deck of probables, plausibles, and possibles. Foresight professionals know this crisis is urging us to reimagine healthcare, supply chains, and urban space. Many of us would agree that the airline industry, currently elbowing its way into the bailout queue, will rightly find its power diminished in the coming decades. Some of us would contend the ideology of eternal growth deserves some overdue competition from steady-state or degrowth perspectives. But it would be a huge mistake to think these preferable futures, however appealing, justifiable, or essential, will automatically come to pass. The moral course is never a given, and should doesn’t always translate to will.

Change happens where power meets appetite, but dread stems from a lack of agency: the future is only something to fear if we can’t influence it. And lack of agency is surely a hallmark of recent years. Networked technology, worshipping the twin gods of simplicity and magic, has indeed done simply magical things, but simultaneously eroded people’s understanding of their possessions and eroded their privacy alike. The tech giants, once seen as useful innovators, have become vast repositories of power and wealth. Economies have become riddled with complex tumours of creative accounting and financial obfuscation. Inequality grows, power centralises, information overloads, and people feel control over their destinies slipping away.

Is it any surprise, then, that some turn to conspiracy for comfort? 5G masts aflame, pizza restaurant death threats, anti-vax paranoia: all stem from a need to explain a world beyond our control. Populism is of course the political wing of conspiracy, a promise that there’s been a solution to the world’s problems all along, but the elites have kept it from you this whole time.

Conspiracy is in league with dread. It excuses people from contributing to the future: why bother, when invisible hands are pulling the strings above us? Conspiracy is a withdrawal from the future, an abandonment of any prospect of agency. Conspiracy and dread both anaesthetise; they conquer all ambition.

The privilege to talk about the shape of the future is just that: a privilege. Events like the Global Foresight Summit are understandable and probably welcome ways for us to discuss challenges ahead. But we must recognise that folks like us – whether we work in technology, foresight, or design – wield disproportionate influence over the future. Surely, then, an emerging responsibility of our collective fields is to ensure this power is better distributed in years to come? We have to re-engage the public in discussions about their futures; we need to restore some of the agency they have lost in recent years. We have to help forge a future beyond dread.

If the world should never return to normal, it follows that there should be no new normal for our fields, either. For too long we’ve been serving the wrong goals: helping large multinationals and tech giants accrue more power and wealth at the expense of other actors, contributing to the atomisation of society by designing products for individual fulfilment ahead of the wellbeing of our communities. Our rethought world will need to prioritise people and societies, ecologies and environments, ahead of profit and productivity. If you use this crisis to thought-prophesise about the new era ahead, don’t you dare return to your cosy consulting gig with Palantir or Shell afterward. Own your impact. Act in the interests of this better world you espouse, and withdraw your support for the forces that brought us to the brink.

We therefore have to rethink our metrics of success. Richard Sandford again:

‘[The old goal was] to claim new ground and set the agenda, working with the ways of managing attention that cultural commentary depends on now: a combination of posts on Medium, op-eds, twitter threads, podcasts, blog posts, articles in the Atlantic/Tortoise/Guardian/the Conversation/etc. The need to produce commentary to the rhythms established by this network favours publication over reflection. It’s hard to see how this will lead to the new way of doing things that is necessary for us to avoid returning to the old normal.’

To me, the primary goal of the foresight community now should be to re-empower the people of the world; to use our skills to help people feel some agency in shaping their own futures. Right now, that means helping people out of helplessness. When the industrial growth era finally breathes its last – whether it’s killed by this pandemic or by climate crisis – we’ll need to mourn it. Those of us who believe in brighter futures to come should walk alongside others through this grieving process, helping them cross the familiar waters of denial, anger, bargaining, and depression. Only once the world learns to accept and understand the loss of this era can we work together to forge brighter eras ahead.

The counter to dread, we are told, is hope. Hope is another of those modern-day clichés, with climate literature in particular following a rigid template: first the doom, then the hope, carefully dosed and tempered by a demand for enormous urgency. Even then, we must be mindful and cautious that the hope we swear by might be unconvincing or invisible to others. Philip Marrii Winzer’s piece I’d rather be called a climate pessimist than cling to toxic hope makes the case for a community decimated by the modern age.

As Aboriginal people, we’ve been fighting the capitalist, colonial system that created this crisis for over 200 years. Forgive me if I find your brand of hope a little hard to swallow […] We know we’re in an abusive relationship with the institutions of power and capital that occupy our lands, but does the climate movement? Much of what the movement focuses its energy and resources on feels a lot like asking an abuser to change their ways.

To me, the message is clear: we will not succeed by simply evangelising our own paternalistic, privileged messages of hope upon others. We won’t convince others that we can conquer the climate crisis by pointing to our previous models of utopias yet unrealised. The only sustainable way to defeat dread is to give people the skills and the powers to forge their own preferable futures. Hope comes from communities, not from experts; it arises with empowerment and inclusivity, not the promises of politicians.

If we are at last able to banish the vestiges of the doomed status quo – as indeed we should – then we have a huge task ahead. Everything is now up for grabs. Our world will need root-and-branch reform, redesign, and reclamation. If we’re to prevent old inequities returning, and keep future generations out of the captivity of conspiracy and hopelessness, we need to force power and money to flow in new, sustainable directions through new, sustainable systems. We have centuries of effort ahead of us. After grief, after dread, comes the most important work of our lives.

Recent tech ethics reading

In support of a proposal and workshop I’m fleshing out, I’ve been back down the metaphorical tech ethics mines. They’re noisier now, and increasingly polluted, but there are still some worthwhile nuggets to be found…

First: a WSJ interview with Paula Goldman, chief ethical and humane use officer at Salesforce. Advisory councils, employee warning systems, product safeguards. IMO Sf are near the forefront of Big Tech ethics in practice, so worth a read.

The Economist warns the techlash isn’t over, despite a Big Five 52% stock price surge. The next recession will kill jobs, with automation likely blamed. Regulatory experiments will coalesce, and resistance will grow to match tech’s ever-broader ambitions.

Johannes Himmelreich argues for political philosophy & a focus on pluralism, human autonomy, and questions of legitimate authority. Moral philosophy is individualised (‘how should I live?’), but this is really about how we should get along as a society.

‘Ethicists were hired to save tech’s soul. Will anyone let them?’ has done the rounds. Again, Salesforce-centric (recent Comms push?), but a fair piece acknowledging the realities of exec-level veto, the toothlessness of Yet More Card Decks, and IMO the tempting error of slotting ethics into existing practices.

Here’s Bruce Sterling, distrustful (as I am) of preemptive codes of ethics. ‘Technological proliferation is not a list of principles. It is a deep, multivalent historical process with many radically different stakeholders over many different time-scales.’

Tom Chatfield agrees. ‘We already know what many of the world’s most powerful automated systems want: the enhancement of shareholder value and the empowerment of technocratic totalitarian states.’ This is sociopolitical, not merely technological.

So is tech ethics a waste of time? LM Sacasas offers a welcome defence, arguing for a ‘yes and’ approach, pointing out that other courses of action – legal, political – still need ethical bases. Pull all the levers, as I often find myself saying.

Choose the interesting work

2020 is shaping up to be a full and unpredictable year, with a curious mix of responsible innovation/ethics training and consulting, lots of graphic and print design, increasing immersion into design futures and foresight, and a strong privacy design pipeline, alongside some good old fashioned IA, talks, panels, and mentoring.

A far cry from the frankly rather stagnant mainstream design work I was doing a few years ago. Happy I chose to turn away from it, and grateful I had the privilege to be able to. Choose the interesting work, wherever you can.